A guide for parents on how online radicalization actually works

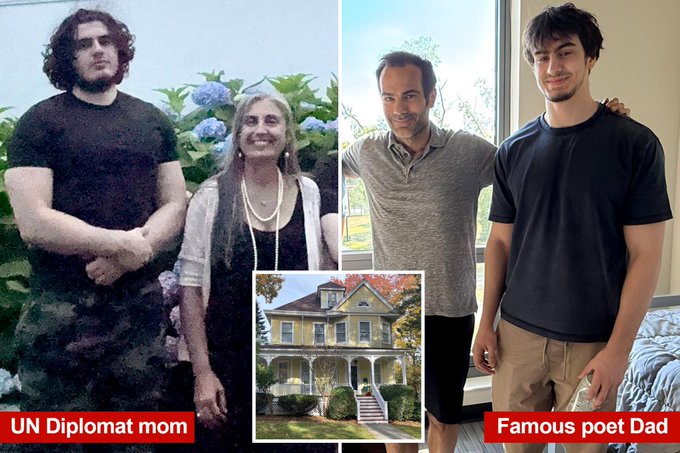

The arrests in New Jersey and Michigan read like a dark comedy written by someone who doesn't understand American privilege. Eight young men from good families, some living in million-dollar homes, some running legitimate online businesses, decided to kill for ISIS.

One of them was the son of a United Nations Women's Entrepreneurship program director. Another's father is a celebrated Iranian-American poet.

These weren't kids from broken homes or desperate circumstances. They had everything America promises your kid they'll get if they work hard and succeed.

So what happened?

Last November, federal prosecutors announced they had stopped what they called a "major ISIS-linked terror plot." The suspects, aged 19 and 20, came from places like Montclair, New Jersey and Dearborn, Michigan.

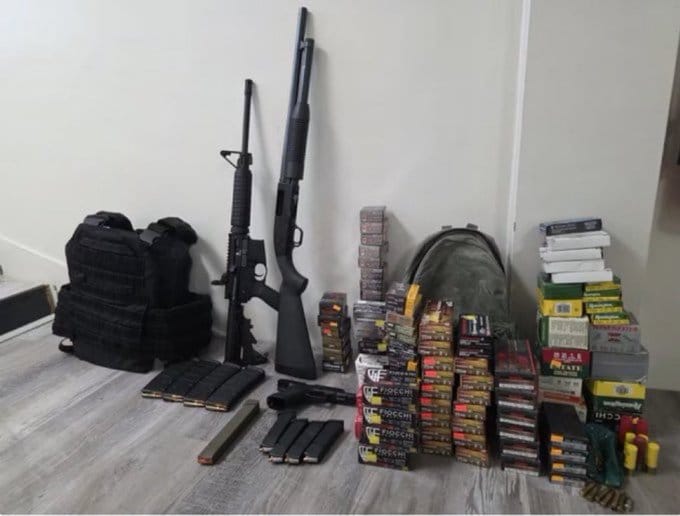

One suspect, Nasser, was earning tens of thousands of dollars monthly through fraudulent Fortnite schemes.

HE WAS ALSO STOCKPILING GUNS.

Another, Sedarat, lived in the kind of Victorian mansion that appears in glossy magazines about suburban New Jersey. Jimenez-Guzel played varsity football. Sedarat wrestled.

FOR NEW YORK'S JEWISH COMMUNITY, THIS IS NO LONGER ABSTRACT.

These aren't hypothetical threats. These are actual young men with actual weapons, arrested before they could execute actual attacks on American soil.

The hate speech flowing through online platforms isn't just offensive anymore. It's directly connected to operational planning. And it's happening at such velocity that even federal law enforcement is struggling to keep pace.

The Jewish community in New York, already reeling from a decade of rising antisemitism on campuses and in the streets, now watches algorithms on platforms designed for teenagers actively radicalize kids toward violence.

THIS IS THE CURRENT MOMENT. THIS IS HAPPENING NOW.

They posed with AR-15s. They discussed bombing bridges. They joked with each other about the FBI monitoring their chats while simultaneously planning to kill Americans.

What's unsettling isn't that these kids got radicalized. It's how normal their path was.

Their radicalization didn't happen in a madrassa in Pakistan or at a mosque under surveillance.

IT HAPPENED ON APPS YOUR CHILD USES EVERY DAY.

It happened in Discord servers. It happened through encrypted Telegram chats. It happened because the internet makes it trivially easy to find people who share your worst impulses, amplify them, and then turn them into action.

One of the suspects' mothers called law enforcement when she noticed her son's behavior changing. Authorities had already been investigating. Parents had confiscated weapons. Nothing stopped it.

THE PULL TOWARD VIOLENT JIHAD, FACILITATED ENTIRELY THROUGH CONSUMER TECHNOLOGY, WAS STRONGER THAN PARENTAL INTERVENTION OR FEDERAL ATTENTION.

The second warning came two days ago in Brooklyn. Michail Chkhikvishvili, a 22-year-old neo-Nazi from the country of Georgia, pleaded guilty to soliciting someone to dress as Santa Claus and hand out poisoned candy to Jewish children and children of racial minorities on New Year's Eve.

He called himself "Commander Butcher." He led something called the "Maniac Murder Cult," a primarily Russian and Ukrainian outfit that operates internationally with cells in the United States.

NEW 🚨Leader of “Maniac Murder Cult” who calls himself ‘Commander Butcher’ takes Plea in Solicitation of Hate Crimes & Mass Violence Case. 23-year-old Michail Chkhikvishvili took a plea deal in the EDNY where the government recommended between 168-210 months(17.5 years) for 2… pic.twitter.com/4wqyIMxFNx

— Lauren Conlin (@conlin_lauren) November 17, 2025

He wasn't arrested because he seemed dangerous. He was arrested because he tried to recruit an undercover FBI agent into the plot. The agent was posing online, and Chkhikvishvili never knew the difference.

THESE CASES AREN'T ANOMALIES. THEY'RE DATA POINTS IN A MUCH LARGER PATTERN THAT MOST PARENTS DON'T UNDERSTAND.

Your child isn't safe from radicalization because they have college savings. They're not safe because they have a good school district or parents who care.

THE MECHANISM THAT RADICALIZES THEM OPERATES OUTSIDE OF THOSE PROTECTIVE FACTORS ENTIRELY.

How Dark Feeds Work Against Your Kid

TikTok and similar platforms operate on a fundamental principle that has nothing to do with what's healthy for young people: engagement.

An algorithm's only loyalty is to keeping users on the platform. If violent extremist content keeps your child scrolling, the algorithm will serve it. If hate speech drives engagement, the algorithm will recommend more.

"Dark feeds" on TikTok are the algorithmic equivalent of a gutter system that collects runoff. They're not organized by any central authority. Instead, they emerge organically when the platform's algorithm begins serving increasingly extreme content to users who interact with edge-case material.

A teenager watching clips criticizing immigration policy might gradually be served content that moves from policy criticism to explicit racial ideology. Someone interested in men's rights content gets recommended material from male supremacist communities.

The progression is gradual, personalized, and invisible to parents.
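For readers who want to see the feedback loop in concrete terms, here is a minimal toy sketch in Python. It is not a description of TikTok's or any other platform's actual system. The function names, the 0-to-10 "extremity" scale, and the rule that a user engages most with content one step past what they are used to are all hypothetical assumptions, chosen only to show how small steps can add up.

```python
# Toy model only. Every name, scale, and rule below is an illustrative
# assumption, not a description of any real platform's recommender.

TOLERANCE = 1  # assumed: the user swipes away anything more than 1 step past their norm


def predicted_engagement(item: int, baseline: int) -> int:
    """Hypothetical score: slightly edgier than the user's norm scores highest."""
    if item - baseline > TOLERANCE:
        return 0  # too far from the user's norm, so they skip it
    return 1 + (item - baseline)


def recommend(catalog: list[int], baseline: int) -> int:
    """Greedy recommender: serve whatever maximizes predicted engagement."""
    return max(catalog, key=lambda item: predicted_engagement(item, baseline))


def simulate(sessions: int, start: int = 0) -> list[int]:
    """Return the extremity level (0 = mainstream, 10 = most extreme) served each session."""
    catalog = list(range(11))
    baseline = start
    served = []
    for _ in range(sessions):
        item = recommend(catalog, baseline)
        served.append(item)
        baseline = item  # assumed: what the user just watched becomes their new normal
    return served


if __name__ == "__main__":
    print(simulate(12))
```

In this toy, no single recommendation ever moves more than one step from what the user saw last, yet the served content climbs steadily to the top of the scale. Nothing in the code "wants" an extreme outcome. It only maximizes the engagement score at each step.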

THE ALGORITHM DOESN'T CARE THAT IT'S CREATING EXTREMISTS. THE ALGORITHM CARES THAT ENGAGEMENT IS UP.

TikTok's recommendation system works in real time, analyzing microsecond interactions to predict what will make a user stay on the app longest. Researchers studying TikTok's algorithm have found that it can push users toward increasingly extreme content within weeks. Some teenagers report being served extremist content within days of creating accounts.

Hate speech on these platforms isn't incidental. It's a feature.

Slurs, conspiracy theories, and violent rhetoric perform extraordinarily well in the engagement metrics that dictate how algorithms work. A TikTok video promoting anti-Semitic conspiracy theories will be seen by more people than a video discussing Middle East policy because hate content is more emotionally volatile. Volatile content makes people comment, share, and return to the platform.

The radicalization happens in increments that are impossible for a parent to catch. Your child isn't shown material labeled "extremist content." They're shown content that looks native to TikTok culture. In-jokes about identity politics. Exaggerated critiques of mainstream institutions. Slightly edgier takes on issues the algorithm knows they care about.

By the time the content clearly crosses into explicit ideological radicalization, your child has already built a community of online friends who share the radical beliefs.

That community is real to them. The people they talk to every night feel more genuine than family members they see at dinner. These online friends understand them. They validate their sense that society is hostile or unjust or corrupt. They provide something most teenagers are desperate for: belonging.

THIS IS WHERE IT BECOMES DANGEROUS.

The Mechanism They All Share

What's striking about both the ISIS recruits from New Jersey and Michigan and the neo-Nazi poison plot is that they operated through the same basic mechanism.

A young person encountered extremist content online. The algorithm served them more of it. They found a community of people who shared their beliefs. That community reinforced and escalated those beliefs. Eventually, the line between fantasy and reality blurred enough that planning actual violence seemed reasonable.

None of them had to leave their homes. None of them had to encounter anything that looked like organized recruitment.

THE SYSTEM BUILT ITSELF THROUGH ALGORITHMS AND HUMAN PSYCHOLOGY. THE YOUNG PERSON'S OWN PATTERNS OF INTEREST FED THE MACHINE THAT RADICALIZED THEM.

The Montclair suspects from the ISIS case used encrypted apps specifically designed to evade law enforcement. But they found those apps because other users recommended them. They found those users because algorithms had already sorted them into communities of increasingly extreme thinkers. The technical tools of radicalization (encrypted chats, dark web access, crypto payments) were taught to them by the radicalization process itself, not the other way around.

Chkhikvishvili's poison plot was the same structure. He operated primarily through Telegram, a messaging app that's perfectly legal and widely used. He recruited through the same social media networks. He escalated through the same process of isolation and community building.

What Parents Actually Need to Know Right Now

Your child's comfort level, their material circumstances, their access to good schools and family support: none of it is irrelevant, but none of it stops algorithmic radicalization.

THE PROCESS THAT RECRUITS A KID IN A MILLION-DOLLAR HOME TO VIOLENT EXTREMISM DOESN'T CARE ABOUT YOUR ANNUAL INCOME OR YOUR PARENTING PHILOSOPHY.

It's automated. It's continuous. It's relentless. It's designed by engineers whose entire job is maximizing engagement, not protecting young people from radicalization.

THIS ISN'T A FUTURE PROBLEM. THIS IS HAPPENING IN REAL TIME.

While you're reading this, algorithms are serving extreme content to millions of teenagers. The Fortnite botter stockpiling weapons in Michigan wasn't an outlier. He was the system working exactly as designed. A teenager found a way to make money through gaming. The algorithm connected him with others. That community radicalized him. He bought guns. He was arrested days before he could use them.

Blocking TikTok won't solve this because the mechanism exists on multiple platforms. YouTube's algorithm has the same structure. Instagram's algorithm works much the same way. Even Discord, designed as a tool for gaming communities, uses engagement metrics that inadvertently amplify extremist content.

The first thing parents need to understand is that they're fighting an economic system, not a few bad actors. TikTok's parent company ByteDance profits directly from engagement. The more time your child spends on the app, the more valuable their attention is to advertisers.

IF EXTREME CONTENT KEEPS THEM ENGAGED, EXTREME CONTENT WILL BE RECOMMENDED.

There's no conspiracy here. It's just capitalism applied to human behavior in a system that has no guardrails.

The second thing is that radicalization feels normal to the person it's happening to. Your child won't come to you and say, "Mom, I've been gradually exposed to increasingly extreme content and I think I'm becoming an extremist." They'll have new friends online. Those friends will seem smart and genuine. The community will feel welcoming and inclusive. The ideology will feel like enlightenment, like finally understanding how the world actually works.

By the time you notice something is wrong, the community attachment is usually already deep.

The third thing is that you can't monitor your way out of this. Helicopter parenting that tries to track every online interaction will destroy your relationship with your child without actually stopping the radicalization. The mechanism is too sophisticated and too constant. A motivated teenager who wants to hide their online activity from parents can always find a way.

The goal isn't to become omniscient about your child's internet habits. The goal is to maintain enough connection that your child still trusts you enough to come to you when they're confused or disturbed by what they're seeing.

THAT'S THE REAL PROTECTION.

What Schools and Administrators Should Be Doing

Universities and high schools have been largely absent from this conversation. They've focused on in-person speech codes and debate moderation while the actual radicalization mechanism operates entirely online, outside their jurisdiction.

THIS IS A MISTAKE.

Schools should be teaching digital literacy that specifically addresses how algorithmic radicalization works. Not in the abstract, but as actual mechanics. How do recommendation systems work? How do they prioritize engagement over safety? What does it mean when you notice content getting progressively more extreme? Why does an online community feel more real to a teenager than their actual community?

Schools should also be staffing their counseling departments with people who understand online radicalization. The warning signs often show up with school counselors or teachers before they show up at home. A kid suddenly becoming more isolated. Becoming consumed by ideology. Dismissing previous friends as not understanding the "real truth." These are observable patterns, but they're only useful if the person observing them knows what they're looking at.

This is different from monitoring speech or trying to police what students believe. It's about understanding how the system that radicalizes young people actually functions and being positioned to intervene early.

The Uncomfortable Question

Chkhikvishvili is facing a recommended 14 to 17.5 years in federal prison. The eight ISIS recruits from New Jersey and Michigan face up to 20 years each. These are real young men who will spend most of their twenties and thirties in federal custody because an algorithm recommended increasingly extreme content and they found a community that validated their worst impulses.

The question isn't whether your kid is smart enough or well-adjusted enough to resist radicalization. The ISIS recruits were both.

THE QUESTION IS WHETHER THE ECONOMIC MODEL THAT POWERS THE APPS THEY USE EVERY DAY CAN BE ALTERED TO NOT ACCELERATE YOUNG PEOPLE TOWARD VIOLENT EXTREMISM.

That's ultimately a political question. It involves regulation, accountability, and forcing technology companies to internalize costs they currently pass on to society. But it's also an immediate question. Not a future question.

ALGORITHMS ARE RADICALIZING TEENAGERS RIGHT NOW. IN YOUR STATE. IN YOUR CITY. POSSIBLY IN YOUR HOME.

What you can do is understand how it works, maintain a relationship with your child that allows for honest conversation about what they're seeing online, and push your child's school and your elected representatives to take this seriously.

Schools cannot continue to ignore what's happening on platforms. They need to teach digital literacy that specifically addresses radicalization. They need counselors trained to recognize the warning signs. They need to understand that the threat isn't coming from some external extremist organization.

IT'S BEING MANUFACTURED IN REAL TIME BY ALGORITHMS YOUR CHILD ACCESSES FROM THEIR BEDROOM.

The eight young men arrested in the ISIS plot had parents who cared about them. Parents who tried to intervene. Parents who confiscated weapons and called law enforcement when they saw the warning signs. It wasn't enough.

THE ALGORITHM WAS MORE POWERFUL THAN PARENTAL INTERVENTION.

That's the conversation we need to have now. Not about whether your kid is a good kid. Your kid probably is. The conversation is about whether the system that distributes content to young people online is fundamentally designed in a way that accelerates radicalization.

Right now, the answer is yes.

And the Jewish community in New York, and communities across this country, are living with the consequences every single day.

Lawsuit Alpha

Global Breaking News You Can Use.Art Vendeley